In our process we transfer a volume X to a new plate for measurement with the Frida Reader, and then we perform an In-Situ Normalization in that same plate (the normalization plate).

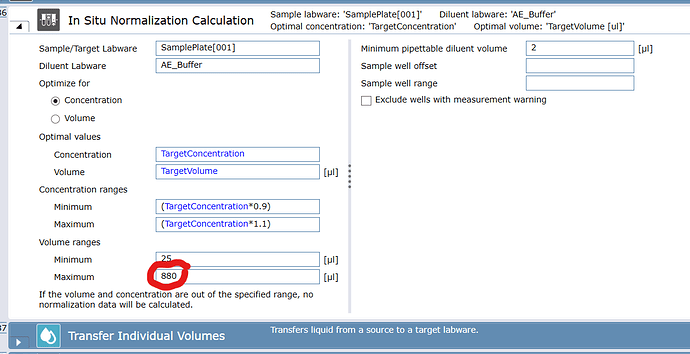

We have noticed that for our negative control (NTC) the Frida Reader will sometimes report a negative concentration. For samples with a negative measurement the normalization goes wrong: the maximum volume that the In-Situ Normalization function allows is added to the well (see attached picture). For the NTC this isn’t too bad, but we have also seen a case where a blank buffer was left on the deck too long. That resulted in negative concentrations for many samples, and therefore a lot of over-diluted material in the plate.

I assume this is a bug in the Fluent Control software. I’ve also seen this behavior when the OD260/230 outcome was a negative number and the concentration was positive. This is a greater concern, because the sample looks ‘fine’ but still ends up over-diluted.

For negative concentration values I have built a check into the script: if a sample's concentration is negative, the script updates the value to a low positive number. For now this is a reasonable workaround. I have not tackled the negative OD260/230 yet, as we only just found out about it.
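To make the idea concrete, here is a minimal sketch of that kind of check. It is written in Python for readability and is not Fluent Control script syntax; the function name, the well-to-value dictionary, and the floor value of 0.1 are all assumptions chosen for illustration, so pick a floor that makes sense for your assay and units.

```python
from typing import Dict, List, Tuple

# Hypothetical floor value; not taken from the actual script.
LOW_POSITIVE_FLOOR = 0.1


def clamp_negative_concentrations(
    concentrations: Dict[str, float],
    floor: float = LOW_POSITIVE_FLOOR,
) -> Tuple[Dict[str, float], List[str]]:
    """Return a copy with negative readings replaced by `floor`,
    plus the list of wells whose value was replaced."""
    if floor <= 0:
        raise ValueError("floor must be a positive number")
    cleaned: Dict[str, float] = {}
    flagged: List[str] = []
    for well, value in concentrations.items():
        if value < 0:
            # Negative reading: substitute the floor and remember the well.
            cleaned[well] = floor
            flagged.append(well)
        else:
            cleaned[well] = value
    return cleaned, flagged
```

Calling `clamp_negative_concentrations({"A1": 25.3, "B1": -0.4})` returns the cleaned values together with `["B1"]`, so the affected wells can also be logged or reported instead of being silently corrected.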

As an additional measure we have implemented a check in the script, right after the blank buffer is measured with the Frida Reader, that compares the results against the specifications required for the blank buffer used in the process. This solves the issue when a blank has gone off (or when the wrong blank buffer was loaded… this was a nice bonus).
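A blank check along these lines could look roughly like the sketch below. Again this is Python rather than Fluent Control script syntax, and everything in it is an assumption: the function name, the idea of storing the blank specification as a (minimum, maximum) window per parameter, and the parameter names used in the example.

```python
from typing import Dict, List, Tuple

# Hypothetical acceptance window per reported parameter: (minimum, maximum).
BlankSpec = Dict[str, Tuple[float, float]]


def check_blank(measurement: Dict[str, float], spec: BlankSpec) -> List[str]:
    """Return human-readable failures; an empty list means the blank passed."""
    failures: List[str] = []
    for name, (low, high) in spec.items():
        if name not in measurement:
            failures.append(f"{name}: missing from blank measurement")
            continue
        value = measurement[name]
        # Written as a negated range test so that NaN readings also fail.
        if not (low <= value <= high):
            failures.append(f"{name}: {value} outside [{low}, {high}]")
    return failures
```

With a made-up specification such as `{"A260": (0.0, 0.05)}`, a blank reading of `{"A260": 0.2}` returns one failure message, and the run can stop before any sample is measured against a bad blank.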

Has anyone else run into the same issue, and if so, how did you solve it?