Of course, it would be simple to tell people to add `tool="p20"` to `lh.pick_up_tips`, but that would break the dream of hardware agnosticism I am hoping for: a single frontend (i.e. protocol syntax) for all LH robots.

A note: I chose `tool` instead of `pipette` there on purpose, because not all liquid handler tools will be pipettes (e.g. syringes or futuristic ultrasonic dispensers), but they will always be tools.

I would actually prefer to overload the more agnostic `channels` argument instead of falling back on `backend_kwargs` (examples of this idea below).

I think we may be close to this scenario.

From my perspective as a lab person and hardware developer, PLR shows some traces of Hamilton-specific hardware in its architecture (which is to be expected).

A few of these traces have made their way into the code’s abstractions, and may require some amount of rethinking/refactoring to remove.

For example, this sentence in the docstring of `LiquidHandler.pick_up_tips`:

> `use_channels`: List of channels to use. Index from front to back.

This, I promise, is not obvious at all unless you’ve seen pictures of a Hamilton robot, which has its channels arranged “front to back”.

I see one compromise that could address this issue.

Overloading channels

To return to my previous example: if there were 3 pipettes with 10 channels in total, the user could "select" a tool implicitly by recalling the integer IDs of the channels in each tool (presumably defined when instantiating the backend) and passing them to the atomic actions in LH.
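To make "recalling the integer IDs" concrete, here is a minimal sketch, assuming the backend simply hands out consecutive global channel IDs to each tool in the order the tools are declared. The function `allocate_channel_ids` and the tool names are hypothetical and not part of PyLabRobot.

```python
from typing import Dict, List


def allocate_channel_ids(channels_per_tool: Dict[str, int]) -> Dict[str, List[int]]:
    """Hypothetical helper: give each tool a consecutive block of global channel IDs."""
    allocation: Dict[str, List[int]] = {}
    next_id = 0
    for tool_name, n_channels in channels_per_tool.items():  # dicts keep insertion order
        allocation[tool_name] = list(range(next_id, next_id + n_channels))
        next_id += n_channels
    return allocation


# Hypothetical setup: two single-channel pipettes and one 8-channel pipette (10 channels).
layout = allocate_channel_ids({"p20": 1, "p300": 1, "p300_8ch": 8})
print(layout)
# {'p20': [0], 'p300': [1], 'p300_8ch': [2, 3, 4, 5, 6, 7, 8, 9]}
```

The user would then pass `channels=[1]` to use the second single-channel pipette, or `channels=layout["p300_8ch"]` for the multichannel.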

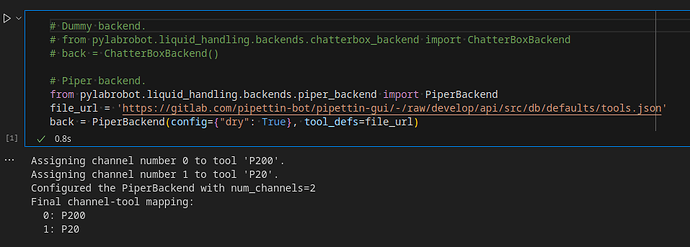

For example, let there be a custom backend with two pipette tools:

```python
# Single-channel
gil_p20 = Pipette(channels=[0],
                  model="gilson_p20_adapter.v1")

# Multi-channel
ot2_gen2_8ch = Pipette(channels=[1, 2, 3, 4, 5, 6, 7, 8],
                       channel_layout="front_to_back",  # Maybe
                       model="ot2_multichannel_8.v2")   # A recognizable ID?

# Example using "tools" instead of "num_channels".
back = toolchanging_backend(tools=[gil_p20, ot2_gen2_8ch])
```
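A minimal sketch of what the hypothetical `Pipette` and tool-changing backend could look like, assuming indexing a tool returns its global channel IDs (so `ot2_gen2_8ch[:]` works) and the backend keeps a channel-to-tool map. Neither class exists in PyLabRobot; `toolchanging_backend` is written here as a class named `ToolChangingBackend`, and the `channel_layout` default is an assumption.

```python
from dataclasses import dataclass
from typing import Dict, List, Sequence, Union


@dataclass
class Pipette:
    """Hypothetical tool description (not an existing PyLabRobot class)."""
    channels: List[int]
    model: str
    channel_layout: str = "front_to_back"  # assumed default

    def __getitem__(self, key: Union[int, slice]) -> List[int]:
        # Indexing a tool yields global channel IDs, so `tool[:]` selects all of them.
        if isinstance(key, slice):
            return self.channels[key]
        return [self.channels[key]]


class ToolChangingBackend:
    """Hypothetical backend that maps every global channel ID to its owning tool."""

    def __init__(self, tools: Sequence[Pipette]):
        self.tools = list(tools)
        self.tool_by_channel: Dict[int, Pipette] = {}
        for tool in self.tools:
            for ch in tool.channels:
                if ch in self.tool_by_channel:
                    raise ValueError(f"channel {ch} belongs to more than one tool")
                self.tool_by_channel[ch] = tool

    @property
    def num_channels(self) -> int:
        # Total channels across all tools.
        return len(self.tool_by_channel)


gil_p20 = Pipette(channels=[0], model="gilson_p20_adapter.v1")
ot2_gen2_8ch = Pipette(channels=list(range(1, 9)), model="ot2_multichannel_8.v2")
back = ToolChangingBackend(tools=[gil_p20, ot2_gen2_8ch])

print(back.num_channels)               # 9
print(ot2_gen2_8ch[:])                 # [1, 2, 3, 4, 5, 6, 7, 8]
print(back.tool_by_channel[0].model)   # gilson_p20_adapter.v1
```

Rejecting overlapping channel IDs at construction time means a given channel number can never be ambiguous later, which is what makes passing plain integers to the frontend safe.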

Note that because Hamilton robots have fixed hardware, their setup and protocols would remain exactly as they were.

The advantage is that no user-facing changes are needed, and protocols can be kept hardware-agnostic. The disadvantage is that the `channels` syntax is opaque, unless we do something about it.

Front-end example:

```python
# The backend may decide whether to guess the tool or raise an error.
await lh.pick_up_tips(tiprack["A1"])

# Or one might just tell it which pipette to use by the channel ID.
await lh.pick_up_tips(tiprack["A1"], channels=[0])

# Or by using the tool/channels object, which is less opaque.
await lh.pick_up_tips(tiprack["A1:A8"], channels=ot2_gen2_8ch[:])
```
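One possible "guess or raise" policy for the backend, sketched under these assumptions: explicit `channels` must all belong to one tool and match the number of tips; without `channels`, the backend guesses only when exactly one tool has exactly as many channels as there are tips. The function `resolve_channels` and the tool names are hypothetical, and the exact-match rule is just one conservative choice.

```python
from typing import Dict, List, Optional, Sequence


def resolve_channels(
    tool_by_channel: Dict[int, str],
    num_tips: int,
    channels: Optional[Sequence[int]] = None,
) -> List[int]:
    """Hypothetical: choose channels for an atomic action such as pick_up_tips."""
    if channels is not None:
        unknown = [ch for ch in channels if ch not in tool_by_channel]
        if unknown:
            raise ValueError(f"unknown channel IDs: {unknown}")
        if len(channels) != num_tips:
            raise ValueError(f"{len(channels)} channels given for {num_tips} tips")
        owners = {tool_by_channel[ch] for ch in channels}
        if len(owners) > 1:
            raise ValueError(f"channels span several tools: {sorted(owners)}")
        return list(channels)

    # No channels given: group IDs by tool and look for a unique size match.
    by_tool: Dict[str, List[int]] = {}
    for ch, tool in sorted(tool_by_channel.items()):
        by_tool.setdefault(tool, []).append(ch)
    candidates = [chs for chs in by_tool.values() if len(chs) == num_tips]
    if len(candidates) != 1:
        raise ValueError(f"cannot infer a tool for {num_tips} tip(s); pass `channels`")
    return candidates[0]


tool_by_channel = {0: "gil_p20", **{ch: "ot2_gen2_8ch" for ch in range(1, 9)}}
print(resolve_channels(tool_by_channel, num_tips=1))                # [0]
print(resolve_channels(tool_by_channel, num_tips=8))                # [1, 2, 3, 4, 5, 6, 7, 8]
print(resolve_channels(tool_by_channel, num_tips=1, channels=[0]))  # [0]
```

Raising rather than guessing whenever the match is ambiguous (e.g. two single-channel tools) keeps protocols predictable; a backend could relax this, for example by letting the multichannel pick up fewer tips than it has channels.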

What do you think? Is this something you’d want to implement?

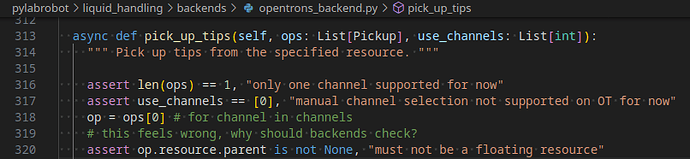

This idea would also provide a way to remove the following limitation I came across in the OT backend:

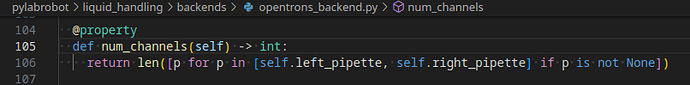

I only just realised that the number of channels is already inferred the way I would expect for this idea, but it's also strange given the above.

Would this give a length of 9 for a 1 + 8 configuration?

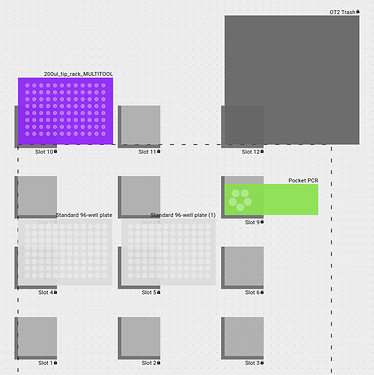

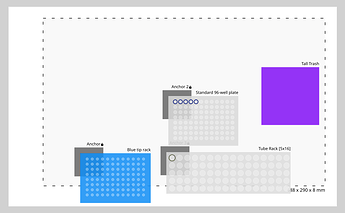

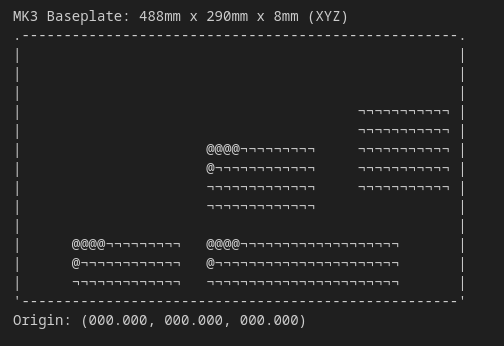


But I was not sure whether the `TubeRack` I had in mind would have ended up looking like your original, and I think it didn't in the end.