The other day I upgraded the Opentrons software from version 6.2.1 to 6.3.1, and I was surprised to find a few new “features”.

All of these issues concern how calibrations are handled. When you load a protocol from the Protocols tab, labware offsets from earlier runs are not carried over, even if you’ve loaded the same protocol before.

Example: you load a media exchange protocol and calibrate the labware offsets.

Then you load the exact same protocol again: no offsets are present.

If you run a similar (but not identical) protocol with labware in the same slots: no offsets are present.

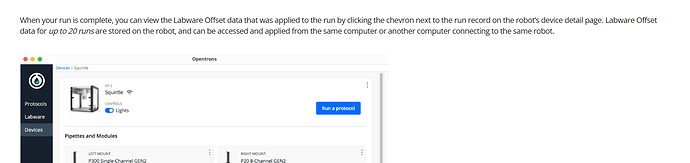

The only way to apply labware offsets from a previous run is to go to the robot tab, scroll down, click a protocol that has offset data, and press “re-run”.

Funnily enough, downgrading to 6.2.1 fixes this.

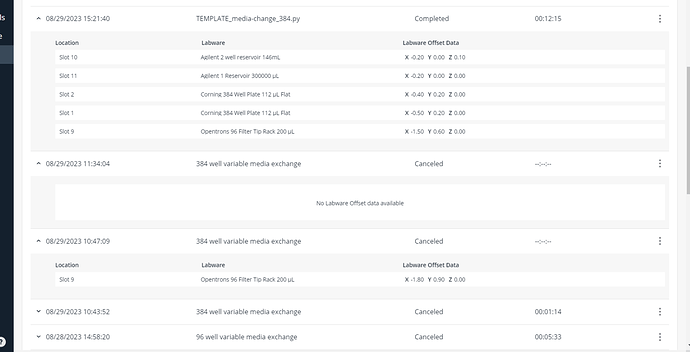

In version 6.2.1, when you loaded a protocol with labware in the same slot, labware offsets were applied regardless of which protocol it was, which makes sense. If the same labware is in the same slot, why would the offset change between protocols? To make this even more mind-boggling, only the previous 20 runs are saved!

Source: How Labware Offsets work on the OT-2

In my opinion: They don’t

So suppose a scientist does 20 runs (including a few they mess up and cancel, since canceled runs count too). By run 21, the offset data from their first run is gone!
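To make the retention problem concrete, here is a toy model using a Python `deque` with `maxlen=20`. It is only an illustration assuming the 20-run limit described above; it is not how the robot server actually stores runs. One calibrated run followed by 20 more (completed or canceled) is enough to push the calibrated run out of the history.

```python
from collections import deque

MAX_RUNS = 20  # retention limit described in the post (assumed fixed)

# Toy model: each entry is (run_number, offsets recorded during that run).
history = deque(maxlen=MAX_RUNS)
history.append((1, {"slot 1": (0.1, -0.3, 0.5)}))  # the run where offsets were calibrated
for run in range(2, 22):  # runs 2..21, whether completed or canceled
    history.append((run, {}))  # no new calibration in these runs

run_numbers = [run for run, _ in history]
print(run_numbers[0], run_numbers[-1])  # 2 21
print(any(offsets for _, offsets in history))  # False: the calibrated run was evicted
```

Once the deque is full, each new run silently drops the oldest one, so any offsets tied only to that run disappear with it.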

Annoyingly, Opentrons doesn’t mention this in the release notes for 6.3.1.

Opentrons seems to be constantly changing how calibration works, and it’s driving me insane. Why can’t we just have a simple dictionary like `Robot["Pipette S/N"]["Labware"]["Slot"] = (x, y, z)` offsets? I would love to have access to such a dictionary since, after all, the spirit of Opentrons is open source.
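A minimal sketch of the kind of store I mean, in plain Python with no Opentrons imports. Everything here is hypothetical: the `Offset` class, `save_offset`, `get_offset`, and `to_json` are my own names, the pipette serial is made up, offsets are assumed to be in millimetres, and slots are keyed as strings. The point is that the lookup depends only on pipette, labware, and slot, never on which protocol or run produced it.

```python
import json
from dataclasses import asdict, dataclass
from typing import Dict


@dataclass(frozen=True)
class Offset:
    """Labware offset in millimetres (assumed units) from the default position."""
    x: float
    y: float
    z: float


# Hypothetical nested mapping: pipette serial -> labware load name -> deck slot -> Offset
OffsetStore = Dict[str, Dict[str, Dict[str, Offset]]]

ZERO = Offset(0.0, 0.0, 0.0)


def save_offset(store: OffsetStore, pipette_sn: str, labware: str, slot: str, offset: Offset) -> None:
    """Record an offset, creating the intermediate dicts if needed."""
    store.setdefault(pipette_sn, {}).setdefault(labware, {})[slot] = offset


def get_offset(store: OffsetStore, pipette_sn: str, labware: str, slot: str) -> Offset:
    """Look up an offset, falling back to zero if this combination was never calibrated."""
    return store.get(pipette_sn, {}).get(labware, {}).get(slot, ZERO)


def to_json(store: OffsetStore) -> str:
    """Serialise the whole store so it can be inspected, backed up, or shared."""
    return json.dumps(
        {sn: {lw: {slot: asdict(off) for slot, off in slots.items()}
              for lw, slots in lws.items()}
         for sn, lws in store.items()},
        indent=2,
    )


store: OffsetStore = {}
save_offset(store, "P300SV0000000", "corning_96_wellplate_360ul_flat", "1", Offset(0.1, -0.3, 0.5))
print(get_offset(store, "P300SV0000000", "corning_96_wellplate_360ul_flat", "1"))
# Offset(x=0.1, y=-0.3, z=0.5)
```

Any protocol that puts the same labware in slot 1 with the same pipette would get the same offset back, no matter how many runs have happened since. In the Python Protocol API (2.12 and later), a looked-up value could then be applied with `labware.set_offset(x=..., y=..., z=...)`.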

In conclusion: either there’s a bug Opentrons needs to address here, or Opentrons is taking a step backwards to the dark days of OT-2 v5.0. I’d seriously love a response from the team (maybe @ethan_jones?) explaining why calibrations can’t just be a simple dictionary like the one I mention above, and what the thought process is behind changing how calibration works so often.

PS: Personally, I found the way it worked in v4.7 the best, but that version of the app lacks some nice features that have since been added. We even have three machines still on that version.